#Download Google Deep Dream Generator for Mac update#

To compute the loss, the image is first converted into a batch of size 1 and then passed forward through the model to retrieve the activations.

Once you have calculated the loss for the chosen layers, all that is left is to calculate the gradients with respect to the image and add them to the original image.

Adding the gradients to the image enhances the patterns seen by the network. At each step, you will have created an image that increasingly excites the activations of certain layers in the network.

The method that does this, `__call__(self, img, steps, step_size)`, is wrapped in a `tf.function` for performance. It uses an `input_signature` of `tf.TensorSpec` entries (such as `tf.TensorSpec(shape=, dtype=tf.float32)`) to ensure that the function is not retraced for different image sizes or `steps`/`step_size` values. See the Concrete functions guide for details.

Each step of the method does the following:

- Watches the image explicitly, because `GradientTape` only watches `tf.Variable`s by default.
- Calculates the gradient of the loss with respect to the pixels of the input image.
- Normalizes the gradients: `gradients /= tf.math.reduce_std(gradients) + 1e-8`.
- Updates the image by directly adding the gradients, which is possible because they have the same shape as the image. In gradient ascent, the "loss" is maximized so that the input image increasingly "excites" the layers.
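The gradient ascent step can be sketched roughly as follows. This is a minimal sketch under stated assumptions, not the tutorial's exact code: the shapes in the `input_signature` are assumed (image of shape `[None, None, 3]`, scalar `steps` and `step_size`), `calc_loss` is a simplified single-output version, the image is assumed to be scaled to `[-1, 1]`, and `toy_model` is a hypothetical one-layer stand-in so the example runs without downloading a pre-trained network.

```python
import tensorflow as tf

def calc_loss(img, model):
    # Add a batch dimension, run the model, and average the activations.
    activations = model(tf.expand_dims(img, axis=0))
    return tf.math.reduce_mean(activations)

class DeepDream(tf.Module):
    def __init__(self, model):
        self.model = model

    @tf.function(
        input_signature=(
            tf.TensorSpec(shape=[None, None, 3], dtype=tf.float32),  # image (assumed shape)
            tf.TensorSpec(shape=[], dtype=tf.int32),                 # steps
            tf.TensorSpec(shape=[], dtype=tf.float32),               # step_size
        )
    )
    def __call__(self, img, steps, step_size):
        loss = tf.constant(0.0)
        for step in tf.range(steps):
            with tf.GradientTape() as tape:
                # `img` is a plain tensor, so it must be watched explicitly.
                tape.watch(img)
                loss = calc_loss(img, self.model)
            # Gradient of the loss with respect to the image pixels.
            gradients = tape.gradient(loss, img)
            # Normalize the gradients by their standard deviation.
            gradients /= tf.math.reduce_std(gradients) + 1e-8
            # Gradient ascent: add the gradients instead of subtracting them.
            img = img + gradients * step_size
            # Keep pixel values in the assumed [-1, 1] range.
            img = tf.clip_by_value(img, -1.0, 1.0)
        return loss, img

# Hypothetical stand-in for the real feature-extraction model.
inputs = tf.keras.Input(shape=(None, None, 3))
outputs = tf.keras.layers.Conv2D(4, 3, padding="same")(inputs)
toy_model = tf.keras.Model(inputs, outputs)

deepdream = DeepDream(toy_model)
img = tf.random.uniform((32, 32, 3), minval=-1.0, maxval=1.0)
loss_before = calc_loss(img, toy_model)
_, dreamed = deepdream(img, tf.constant(10), tf.constant(0.01))
loss_after = calc_loss(dreamed, toy_model)
```

Because the signature leaves the image height and width unspecified, calling `deepdream` again with a differently sized image or different `steps`/`step_size` values should reuse the same traced function.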

Choose the layers whose activations you want to maximize, collect their outputs in `layers`, and build the model that returns them: `dream_model = tf.keras.Model(inputs=base_model.input, outputs=layers)`.

The loss is the sum of the activations in the chosen layers. The loss is normalized at each layer so that the contribution from larger layers does not outweigh that of smaller layers. Normally, loss is a quantity you wish to minimize via gradient descent. In DeepDream, you will maximize this loss via gradient ascent.
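To see why per-layer normalization matters, consider the small NumPy sketch below. It assumes that "normalized" means taking the mean activation of each layer, which is one common choice; the text above does not show the exact formula. The two activation arrays are made-up stand-ins for layers of different size.

```python
import numpy as np

# Hypothetical activations from two chosen layers of very different size.
small_layer = np.full((8, 8, 4), 0.5)
large_layer = np.full((8, 8, 64), 0.5)
activations = [small_layer, large_layer]

# Raw sums: the larger layer dominates purely because it has more units.
raw_terms = [float(np.sum(a)) for a in activations]

# Per-layer means: each layer contributes on the same scale.
normalized_terms = [float(np.mean(a)) for a in activations]

# The DeepDream-style loss is the sum of the normalized per-layer terms.
loss = sum(normalized_terms)

print(raw_terms)         # [128.0, 2048.0]
print(normalized_terms)  # [0.5, 0.5]
print(loss)              # 1.0
```

With raw sums the larger layer contributes 16 times as much as the smaller one even though every unit has the same activation; with means both layers contribute equally.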

#Download Google Deep Dream Generator for Mac free#

The image is fetched with `image_path = tf._file(name, origin=url)` and displayed with `display.display((np.array(img)))`. Downsizing the image makes it easier to work with: `original_img = download(url, max_dim=500)`. The image credit is shown with `display.display(display.HTML('Image cc-by: Von.grzanka'))`.

Download and prepare a pre-trained image classification model. You will use InceptionV3, which is similar to the model originally used in DeepDream. Note that any pre-trained model will work, although you will have to adjust the layer names if you change this.

The idea in DeepDream is to choose a layer (or layers) and maximize the "loss" in a way that the image increasingly "excites" the layers. The complexity of the features incorporated depends on the layers you choose, i.e., lower layers produce strokes or simple patterns, while deeper layers give sophisticated features in images, or even whole objects.

The InceptionV3 architecture is quite large (for a graph of the model architecture see TensorFlow's research repo). For DeepDream, the layers of interest are those where the convolutions are concatenated. There are 11 of these layers in InceptionV3, named 'mixed0' through 'mixed10'. Using different layers will result in different dream-like images. Deeper layers respond to higher-level features (such as eyes and faces), while earlier layers respond to simpler features (such as edges, shapes, and textures). Feel free to experiment with the layers selected for this tutorial, but keep in mind that deeper layers (those with a higher index) will take longer to train on since the gradient computation is deeper.
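As a sketch of the layer selection described above, the snippet below builds InceptionV3 through `tf.keras.applications`, checks that the layers `mixed0` through `mixed10` exist, and wires two of them into a model. The choice of `mixed3` and `mixed5` is an assumption made for illustration, and `weights=None` is used only so the sketch runs without downloading anything; for actual DeepDream images you would pass `weights="imagenet"` to get the pre-trained model.

```python
import tensorflow as tf

# weights=None keeps this sketch offline (randomly initialized weights).
# Pass weights="imagenet" to load the pre-trained model DeepDream needs.
base_model = tf.keras.applications.InceptionV3(include_top=False, weights=None)

# The 11 concatenation layers, 'mixed0' through 'mixed10'.
mixed_names = [f"mixed{i}" for i in range(11)]
available = {layer.name for layer in base_model.layers}
missing = [name for name in mixed_names if name not in available]

# Example choice (an assumption): two mid-level layers.
names = ["mixed3", "mixed5"]
layers = [base_model.get_layer(name).output for name in names]

# Model that maps an input image to the activations of the chosen layers.
dream_model = tf.keras.Model(inputs=base_model.input, outputs=layers)

print("missing layers:", missing)
print("number of outputs:", len(dream_model.outputs))
```

Swapping in other names from `mixed_names` changes which features get amplified; lower indices favor edges and textures, higher indices favor more complex structures.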

#Download Google Deep Dream Generator for Mac download#

Download an image and read it into a NumPy array.

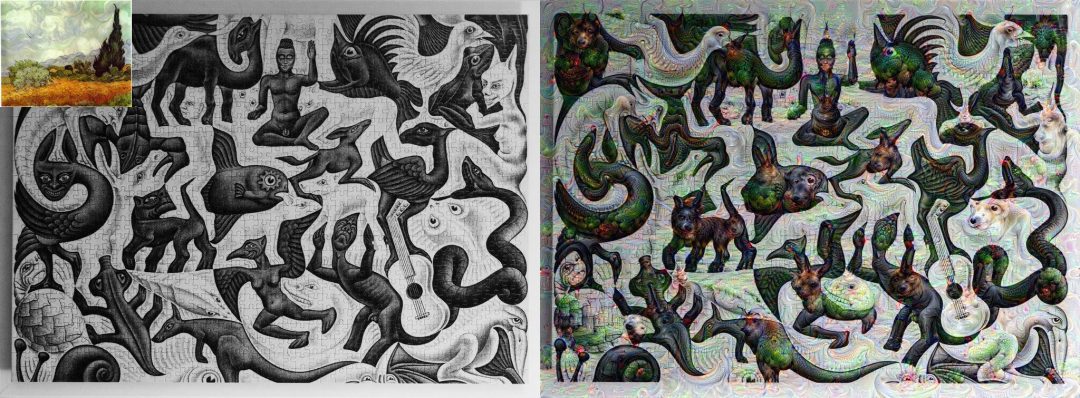

Let's demonstrate how you can make a neural network "dream" and enhance the surreal patterns it sees in an image.

The setup code includes the import `from import image`.

For this tutorial, let's use an image of a labrador.

#Download Google Deep Dream Generator for Mac movie#

This tutorial contains a minimal implementation of DeepDream, as described in a blog post by Alexander Mordvintsev.

DeepDream is an experiment that visualizes the patterns learned by a neural network. Similar to when a child watches clouds and tries to interpret random shapes, DeepDream over-interprets and enhances the patterns it sees in an image.

It does so by forwarding an image through the network, then calculating the gradient of the activations of a particular layer with respect to the image. The image is then modified to increase these activations, enhancing the patterns seen by the network and resulting in a dream-like image. This process was dubbed "Inceptionism" (a reference to InceptionNet and the movie Inception).
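The core idea can be illustrated without a neural network. The sketch below is a toy, not the tutorial's implementation: it assumes a hypothetical single "feature detector" whose activation is the sum of the image multiplied elementwise by a fixed pattern, so the gradient of the activation with respect to the image is simply that pattern. The names `pattern`, `activation`, and `activation_gradient` are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for one layer: a fixed pattern the "network" responds to.
pattern = rng.standard_normal((8, 8))
image = rng.uniform(-1.0, 1.0, size=(8, 8))

def activation(img):
    """Toy activation: how strongly the image matches the pattern."""
    return float(np.sum(img * pattern))

def activation_gradient(img):
    """Gradient of the toy activation with respect to the image pixels."""
    return pattern  # d/d(img) of sum(img * pattern) is the pattern itself

before = activation(image)

# Nudge the image in the direction that increases the activation,
# then keep the pixel values in the [-1, 1] range.
dreamed = np.clip(image + 0.1 * activation_gradient(image), -1.0, 1.0)

after = activation(dreamed)
print(after > before)  # True
```

Each pixel moves in the direction of its gradient, so the image matches the pattern more strongly after the update. DeepDream applies the same kind of update repeatedly, with the activations coming from real network layers.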
